DORA Community Blog

Testing All the Way Around the DevOps Loop

Lisa Crispin, an independent consultant, author and speaker, kicked off a community discussion on Testing.


Latest Posts

Testing All the Way Around the DevOps Loop

February 22, 2024 by Amanda

Discussion Topic: Testing

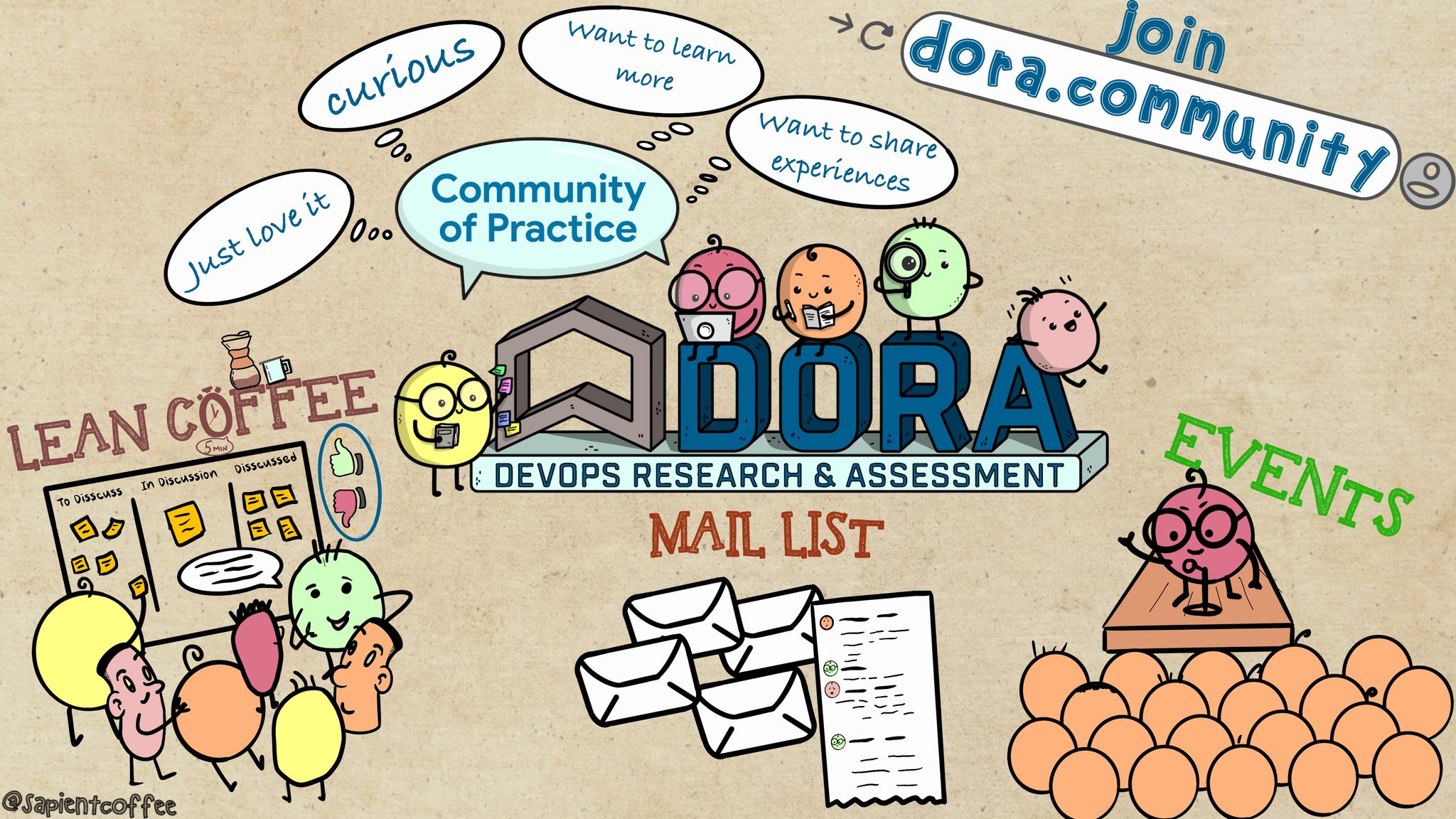

The DORA Community Discussions start with a brief introduction talk, followed by a discussion with the attendees using the Lean coffee format.

Topics discussed:

How can the DORA metrics contribute to quality?

Recap from the community discussion:

- DORA metrics focus on process. Lead time, deployment frequency, etc. primarily reveal process efficiency, not product quality.

- DORA metrics can indirectly influence quality. A smooth process facilitates the focus needed to improve product quality.

- Look for product-oriented metrics. Consider deployment failure rate to give a high-level picture of quality or supplement DORA with quality-specific metrics.
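As a rough illustration of the process-focused metrics mentioned above, here is a minimal sketch of computing lead time and deployment frequency. The sample data and function names are hypothetical, and real definitions of "commit" and "deploy" times vary by team.

```python
from datetime import datetime
from statistics import median

# Hypothetical sample data: (commit time, production deploy time) per change.
CHANGES = [
    (datetime(2024, 2, 1, 9, 0), datetime(2024, 2, 1, 15, 0)),   # 6 hours
    (datetime(2024, 2, 2, 10, 0), datetime(2024, 2, 3, 10, 0)),  # 24 hours
    (datetime(2024, 2, 5, 8, 0), datetime(2024, 2, 5, 10, 0)),   # 2 hours
]

def median_lead_time_hours(changes):
    """Median commit-to-deploy time in hours."""
    hours = [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]
    return median(hours)

def deployments_per_day(changes, window_days):
    """Average number of deployments per day over the observation window."""
    return len(changes) / window_days

print(median_lead_time_hours(CHANGES))
print(deployments_per_day(CHANGES, window_days=7))
```

Both numbers describe how work flows through the process; neither says whether the product itself is good, which is why the group suggested supplementing them with quality-specific metrics.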

What about teams that do not have any testing specialists?

Recap from the community discussion:

- Focus on outcomes. Emphasize the outcomes that the team is trying to achieve, rather than just the tasks that need to be completed.

- Continuous learning is key. Teams should invest time in understanding different testing techniques to compensate for a lack of specialists.

When testing is everywhere, what is the role of specialized testers?

Recap from the community discussion:

- Testers become quality coaches. They focus on teaching, mentoring, and improving the team's overall quality mindset.

- Specialists bring new perspective. Their focus on strategy and the "big picture" of testing elevates the whole team.

- Testers can unblock teams. They help with tasks outside of direct testing, bringing different strengths to the team.

How can we improve quality through observability?

Recap from the community discussion:

- Observability tools can assist in identifying and diagnosing production issues, enabling teams to learn from and prevent future problems.

- Use metrics and tracking to identify areas for improvement.

How does non-functional testing fit in (scale, security, DR)? Does it get done with every iteration?

Recap from the community discussion:

- Quality attributes mindset. Avoid the vague term "non-functional," focus on specific attributes like reliability and scalability.

- Use visual models for planning. Models like the Holistic Testing Model help ensure you don't miss important areas.

- Make time for it. Consciously plan for testing non-functional aspects as early as possible. Leverage new tools. CI pipelines and production testing tools make this easier.

- Treat operational requirements like functional features. Prioritize them on the backlog, with dedicated time and resources for testing.

There's an adage along the lines of - when everyone is responsible for something, no one is. How does that translate to everyone in a cross-functional team being responsible for quality?

Recap from the community discussion:

- "Everyone's responsible" can fail. Without clear ownership, testing may get deprioritized.

- Visual models help. Tools like the Agile Testing Quadrants provide a framework to discuss and plan testing coverage.

- Assign clear responsibility. Make testing an explicit task within a development process, like code review. Someone needs to be accountable for quality.

- Consider an enabling team. Some organizations have quality-focused teams that support and set standards for other teams (rather than individual testers within teams).

Open Discussion on Code Review Use Cases

February 8, 2024 by Amanda

Discussion Topic: Code Reviews

The DORA Community Discussions start with a brief introduction talk, followed by a discussion with the attendees using the Lean coffee format.

Topics discussed:

Suggestions around code review metrics: code review velocity, PR cycle time, etc.

Recap from the community discussion:

- The Need for Clear Terminology: The terms "velocity", "code review", and "inspection" are often used inconsistently. Participants agreed on the importance of using specific terms like cycle-time, response-time, or review-rate to improve clarity.

- Metrics: The group emphasized defining metrics based on clear business goals and aligning them with company culture. See the CHAOSS recommended metrics.

- Potential good metrics: time to merge, iterations, time waiting for a submitter action, how are the reviews distributed across the team, and time waiting for a reviewer action.

- LinkedIn DPH Framework

- Context matters - weekends, holidays, vacations, etc. can affect metrics.

- https://github.com/lowlighter/metrics
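Several of the candidate metrics above (time to merge, iterations, time waiting for a reviewer or submitter action) can be derived from a pull request's event timeline. The following is a minimal sketch under stated assumptions: the event kinds (`opened`, `review`, `update`, `merged`) and the rule for who "holds the ball" are hypothetical simplifications, not the schema of any particular tool.

```python
from datetime import datetime

def review_metrics(events):
    """Compute review metrics from a time-ordered list of (timestamp, kind).

    Assumes the first event is "opened" and the last is "merged".
    After a "review" event the submitter is expected to act; after
    "opened" or "update" the reviewer is expected to act.
    """
    opened_at = events[0][0]
    merged_at = events[-1][0]
    waiting = {"reviewer": 0.0, "submitter": 0.0}
    iterations = 0
    for (start, kind), (end, _next_kind) in zip(events, events[1:]):
        holder = "submitter" if kind == "review" else "reviewer"
        waiting[holder] += (end - start).total_seconds() / 3600
        if kind == "review":
            iterations += 1
    return {
        "time_to_merge_hours": (merged_at - opened_at).total_seconds() / 3600,
        "iterations": iterations,
        "waiting_for_reviewer_hours": waiting["reviewer"],
        "waiting_for_submitter_hours": waiting["submitter"],
    }

# Hypothetical timeline for a single pull request.
PR_EVENTS = [
    (datetime(2024, 2, 8, 9, 0), "opened"),
    (datetime(2024, 2, 8, 13, 0), "review"),
    (datetime(2024, 2, 8, 14, 0), "update"),
    (datetime(2024, 2, 8, 16, 0), "review"),
    (datetime(2024, 2, 8, 16, 30), "merged"),
]

print(review_metrics(PR_EVENTS))
```

As noted in the discussion, context matters: a timeline that spans a weekend or holiday would inflate the waiting times unless non-working hours are excluded.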

Where is the value of code review on a high trust team?

Recap from the community discussion:

- Knowledge sharing across the organization, learning the way others work, and making code more homogeneous

- Code review is more valuable when learning is the result

Code Review vs. Automated Testing

Recap from the community discussion:

- Automating Reviews and Testing: using automated testing to streamline review processes, along with reviewing the tests themselves to ensure overall code quality.

- Linters and Auto-Fixing: The value of linters for enforcing style and catching errors was emphasized, along with using auto-fixes within CI pipelines.

- Testing can help save time -- make conversations more valuable: first, check the tests and then ask the more complicated questions

- Adding automation & static analysis into a CI pipeline is often called "Continuous Review" (Duvall, 2007). Adding AI into that was getting dubbed "Continuous Intelligence" ca. 2018 or so (along with "Continuous Compliance")

- Context matters -- age of codebase, team culture, team expertise, industry

- Paul Hammant's blog - The Unit of Work Should Be a Single Commit

- Trust and Test-Driven Development for People

Can AI replace a human in code review? When, where, and how?

Recap from the community discussion:

- AI vs. Traditional Code Analysis: The potential of AI-powered code reviewing tools was discussed and compared with existing code analysis approaches. It was noted that AI reviewers could go beyond traditional tools, but it's important to see how they function in practice.

- Code Written by AI vs. Code Reviewed by AI: The conversation highlighted the distinction between these two scenarios, with implications for code quality and the role of human developers.

- Be aware of the AI training set

- Human judgement is important

February 2024 Community News

February 1, 2024 by Amanda

Hello! Thank you for all of the wonderful discussions in January! It was exciting to meet several members for the first time. We discussed what teams are focused on in 2024, Metrics, and Applying DORA in your Context. If you missed any of the discussions, you can watch intro presentations, and read event recaps on the DORA.community blog.

We will continue to explore how teams can improve their code reviews, with a presentation by Daniel Izquierdo Cortázar and community discussion on Thursday, February 8th. On February 22nd, we will have a community discussion on testing, which will start with a presentation by Lisa Crispin. If you are looking to connect with fellow DORA community members, this month’s community connection is on February 14th. We will end the month with Metric Monday discussions on February 26th. More information on the events is below.

Are you located in or near Boulder, CO? The DORA Advocacy team will be in Boulder on February 20th. Let us know if you are interested in a meet up by filling out this form.

A special thank you to everyone who has participated in the DORA research, and to everyone that voted for DORA in the 2023 DevOps Dozen Community Awards. The DORA report won!! 🎉 🎉 🎉

What’s New?:

DORA.community Upcoming Events:

Community Connections (45 minutes)

We break into small groups for networking, then spend the last 10 minutes sharing as a larger group.

February 14th: 12:30pm UTC & 21:00 UTC

Community Lean Coffee Discussions (1 hour):

The discussion starts off with a brief presentation, then we use the lean coffee format for the remainder of the discussion.

Open Discussion on Code Review Use Cases - February 8th: 15:00 UTC

Code review is essential in software development nowadays. It brings certain advantages to the organization producing code (whether this is done in the open or internally as proprietary), such as a better knowledge sharing process, faster onboarding of newcomers, a more resilient organization, and faster bug hunting in the software production chain.

This talk will share some public use cases and rationale to do code review with real-life examples for illustration as well as open the discussion for the rest of the hour together.

Speaker: Daniel Izquierdo Cortázar, CEO @ Bitergia, President @ InnerSource Commons Foundation, CHAOSS Governing Board. Daniel Izquierdo Cortázar is a researcher and one of the founders of Bitergia, a company that provides software analytics for open and InnerSource ecosystems. Currently holding the position of Chief Executive Officer, he is focused on the quality of the data, research of new metrics, analysis and studies of interest for Bitergia customers via data mining and processing. Izquierdo Cortázar earned a PhD in free software engineering from the Universidad Rey Juan Carlos in Madrid in 2012 focused on the analysis of buggy developers activity patterns in the Mozilla community. He is an active contributor and board member of CHAOSS (Community Health Analytics for Open Source Software). He is an active member and President at the InnerSource Commons Foundation.

Testing All the Way Around the DevOps Loop - February 22nd: 20:00 UTC

We hear a lot of talk about “shift left” in testing. And, if you do an internet search for “DevOps loop”, you’re likely to see images that feature a “test” phase – sometimes on the left side, sometimes on the right. But software development is not linear, and testing is not a phase. Testing is an integral part of software development. Testing activities happen all the way around the infinite DevOps loop. Let’s look at examples of where testing happens, especially in the right-hand side of that DevOps loop. And, talk about who can and should engage in those activities.

Speaker: Lisa Crispin is an independent consultant, author and speaker based in Vermont, USA. Together with Janet Gregory, she co-authored Holistic Testing: Weave Quality Into Your Product; Agile Testing Condensed: A Brief Introduction; More Agile Testing: Learning Journeys for the Whole Team; and Agile Testing: A Practical Guide for Testers and Agile Teams; and the LiveLessons “Agile Testing Essentials” video course. She and Janet co-founded a training company offering two live courses world-wide: “Holistic Testing: Strategies for agile teams” and “Holistic Testing for Continuous Delivery”.

Lisa uses her long experience working as a tester on high-performing agile teams to help organizations assess and improve their quality and testing practices, and succeed with continuous delivery. She’s active in the DORA community of practice. Please visit lisacrispin.com, agiletester.ca, agiletestingfellow.com, and linkedin.com/in/lisacrispin for details and contact information.

Metric Monday (1 hour)

The discussion starts off with a brief presentation on metrics, then we use the lean coffee format for the remainder of the discussion.

February 26th: 10:00 UTC & 20:00 UTC

Upcoming Conferences & Events from the community:

DevOpsDays

- Mar 13-14: Salt Lake City

- Mar 15: Los Angeles

- Mar 18-19: Kraków

- Mar 21-22: Singapore

- Apr 10-11: Raleigh

- Apr 16-17: Zurich

- Feb 15-16: DevOps.js

- Mar 18-20: SREcon Americas

- Apr 24-25: Enterprise Technology Leadership Summit Europe (Virtual)

In case you missed it:

Asynchronous Discussions:

- DORA - multiple applications

- DORA at Booking.com

- DORA success stories

- DORA vs SPACE vs DevEx

- The new DORA Quick Check

On the playlist: Catch-up on the latest community discussions on the DORA Community playlist:

DORA around the world:

Content & Events

- How booking.com doubled their team’s delivery performance within a year as measured by DORA metrics

- Measuring Developer Productivity: Real-World Examples

- How Airbnb measures developer productivity

- DORA, SPACE, and DevEx: Choosing the Right Framework

- Applying the SPACE Framework

- DORA Core: What Is It And How It Helps Organizations Navigate Through Changes

- Getting to the Root of Engineering Improvement with DORA Core

- DORA Metrics: What are they, and what's new in 2023?

- The DORA State of DevOps Report in 1 minute

- Waydev 2023 in Review: Revolutionizing the Software Engineering Intelligence Market with DX, DORA Metrics, and the SPACE Framework

- 55 Fascinating DevOps Statistics You NEED To Know In 2023

- What DevOps teams need to know for 2024

- How to Quantify the ROI of Platform Engineering

- Database Change management

- Implementing the research

- Deloitte's 2023 State of DevOps Report Webinar

- 0800-DEVOPS #54 - 2023 State of DevOps Report with Nathen Harvey - CROZ

- Key findings from the 2023 State of DevOps Report | Nathen Harvey (DORA at Google)

- Insights into the 2023 Accelerate State of DevOps Report

Thank you for your continued collaboration, we truly appreciate it!!

Smiles,

Amanda

Applying DORA in Your Context

January 25, 2024 by Amanda

Discussion Topic: Applying DORA in Your Context

The DORA Community Discussions start with a brief introduction talk, followed by a discussion with the attendees using the Lean coffee format.

Topics discussed:

What are your biggest challenges to adapt/apply DORA?

Context matters, and trying to simply copy-and-paste DORA is rarely successful. So how are your teams and organizations adapting and applying DORA?

From the community:

- Difficult to capture the data properly

- application-specific constraints and practices

- Understanding the "why". Why is it important for me, the engineer? Which is often a different question than "why is it important for my organization?"

- In the absence of information we create a narrative that best supports the information that we have.

- Very often, folks will use whatever you put on a dashboard... so start adding the healthier metrics to the top of your dashboard and move the questionable data to the bottom, or remove it completely.

Do we need to get upper management to care about continuous improvement & tracking metrics for that? (and if so, how)

From the community:

- Draw upper management into the discussion with the goal of "innovation". Use innovation as a way to connect to "software delivery"

- Demonstration wins over conversation. Focus on little wins, keep iterating. Communicate the progress to upper management.

- Deming was committed to training the executives before practitioners. Deming’s Journey to Profound Knowledge

- "Organizational scar tissue": most processes were put in place with good intentions, but we often fail to revisit whether or not they're still helpful.

What strategies have you used to encourage the change to come from your team / the people closest to the work?

From the community:

- Manage the message; try to measure "things" (capabilities, pipelines, streams, etc.) rather than "people" (teams, members, managers)

- Start by first identifying a problem and setting a goal, and then finding a way to measure progress towards the goal. This leads to more buy-in on using the metric.

How do you (safely) introduce metrics without it feeling like everyone is under the microscope?

From the community:

- "The First thing to build is TRUST!"

- Use dashboards that only have charts for things that are used for decisions. If quality needs attention... you can subtly add the signal and the questions soon arise that give you the opportunity to say how they could proceed.

- Look at the data with your team, include them in the discussions, ensure they understand how the metrics will be used for good.

- Find metrics that showcase where the team is excelling, along with metrics that they can use to identify areas for improvement.

- Technology adoption life cycle

- The SPACE of Developer Productivity: There's more to it than you think.

- From Dave Farley's Modern Software Engineering book: "...why do we, as software developers, need to ask for permission to do a good job? We are the experts in software development, so we are best placed to understand what works and what doesn't."

- Build in slack time to allow the team to think about continuous improvement opportunities

- Gameify and celebrate the largest lessons learned over the biggest "winners"

Join the DORA Community Google group to receive invitations to future DORA Community events.

Metrics Monday January 2024 - DORA Community Discussion

January 22, 2024 by Amanda

Discussion Topic: Metrics

The DORA Community Discussions start with a brief introduction talk, followed by a discussion with the attendees using the Lean coffee format.

Topics discussed:

How do you measure the success of features you add to your software?

From the community:

- Measure whether the feature was a success. Possible measures: net promoter score (NPS), click-throughs, knowledge base usage (did it drop off?), increased engagement

- Define success before developing the feature

- Did it make our lives better?

- Connect to analytics, connect to dashboards

- To show the value of the feature, show it to the business

- Product Analytics can be quite useful, particularly if it's in the frontend space - often they can break down which buttons are being clicked, which individual features or experiments are being used, that were batched together.

- A/B testing is a nice approach to split up the multiple improvements, too... if you have the capability to do it.

- Feature flagging different audiences would allow more experiments concurrently, as long as you have sufficient volume... although the law of multivariate testing is to expect most experiments to end up making no measurable impact
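To judge whether an A/B experiment made a measurable impact, one common approach is a two-proportion z-test on conversion counts. This is a minimal sketch using only the standard library; the function name and sample numbers are hypothetical, and it assumes both groups are large enough for the normal approximation and that the pooled conversion rate is neither 0 nor 1.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) comparing conversion rates of A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)

# Hypothetical experiment: 100/1000 conversions in control, 104/1000 in variant.
z, p_value = two_proportion_z_test(100, 1000, 104, 1000)
print(f"z={z:.2f}, p={p_value:.2f}")
```

With a difference this small the p-value is far above the usual 0.05 threshold, which matches the point made in the discussion: most experiments will not show a measurable effect, and sufficient volume is needed to detect one.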

How are your teams defining and measuring failed changes?

From the community:

- Deployment failure is often defined as the system being offline, but that misses cases where crucial features are broken even though the site is still up. Also consider non-functional requirements, e.g. the page load taking longer than expected.

- When something needs to be remediated

- It gets very complicated when several teams are working on the same product and deploying multiple features.
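Taking the "when something needs to be remediated" definition above, a change failure rate could be sketched as follows. The `Deployment` record and its fields are hypothetical, and what counts as remediation (rollback, hotfix, patch) is a team decision.

```python
from dataclasses import dataclass

@dataclass
class Deployment:
    team: str
    needed_remediation: bool  # e.g. a rollback, hotfix, or patch was required

def change_failure_rate(deployments):
    """Share of deployments that needed remediation (0.0 if there were none)."""
    if not deployments:
        return 0.0
    failed = sum(d.needed_remediation for d in deployments)
    return failed / len(deployments)

def failure_rate_by_team(deployments):
    """Break the rate down per team when several teams ship the same product."""
    by_team = {}
    for d in deployments:
        by_team.setdefault(d.team, []).append(d)
    return {team: change_failure_rate(items) for team, items in by_team.items()}

# Hypothetical deployment history.
HISTORY = [
    Deployment("checkout", needed_remediation=True),
    Deployment("checkout", needed_remediation=False),
    Deployment("search", needed_remediation=False),
    Deployment("search", needed_remediation=False),
]

print(change_failure_rate(HISTORY))
print(failure_rate_by_team(HISTORY))
```

Tagging each deployment with the team that shipped it is one possible way to handle the multi-team case, though it does not resolve failures caused by interactions between changes.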

How to involve Product management in Engineering Metrics Discussion?

From the community:

- Embed the PM within the application team, integrate them, and include them in team discussions.

- By working on improving lead time for changes and/or cycle time as a team, the PM can eventually move away from building up a backlog of requests - this becomes possible when there is an efficient feedback cycle.

- Encourage them to discuss with their PM how to break work into buckets (big wins, continuous improvement, service health) and make it a deliberate discussion

Is there a metric that points towards the maintainability of the application? Or is it something we need to derive from multiple metrics?

From the community:

- Application health

- Code health

- Audience is important, the metrics need to be valuable to the audience

- Surveying the people that work on the system, their satisfaction

- Developers tend to say things are really bad with the code, but sometimes that is not true and they overestimate the problem

- Technical debt

- How often is the codebase changing?

- How much does the team like / not like the codebase?

- How much revenue is it bringing in / are customers using it?

- How much test coverage do we have?

- How do you define bad code? Is it not how it is currently being written? Does that impact users?

- Build time, fixing production

What's more important - the definition of your metric, or how consistently you measure it?

From the community:

- Yes! Capture and share the definition of the metric as well as a timeline for when to revisit its definition

Are SLOs the only way to add meaning to 'availability'/'uptime' metrics? How else do you do this?

From the community:

- SLOs usually start with system metrics, such as CPU usage. With proper implementation, this leads to using business metrics, such as bookings and booking value.
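One way to move from system metrics toward business metrics is to compute availability over business events rather than machine health. This is a minimal sketch, assuming "good" events are successful booking attempts and a hypothetical 99.9% target; the function names and numbers are illustrative and not from the discussion.

```python
def availability(good_events, total_events):
    """Fraction of events that succeeded; 1.0 when there was no traffic."""
    if total_events == 0:
        return 1.0
    return good_events / total_events

def meets_slo(good_events, total_events, slo_target):
    """True when measured availability is at or above the SLO target."""
    return availability(good_events, total_events) >= slo_target

# Hypothetical numbers: 9,995 of 10,000 booking attempts succeeded.
print(availability(9_995, 10_000))
print(meets_slo(9_995, 10_000, slo_target=0.999))
```

The same calculation works for any event the business cares about, which is what gives the resulting number more meaning than raw uptime.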

What are good metrics for technical debt?

From the community:

- Technical debt usually has a drag factor, so you'd expect your DORA metrics to highlight when this is causing too much friction... either due to throughput reducing, or stability deteriorating.

- Establish a technical debt assessment

- Identify it, have plan to address it

Tips for summarizing comparisons? (eg. before/after)

From the community:

- Control Chart - Statistical Process Control Charts | ASQ

- Probability and Statistics Topics Index - Statistics How To

- A Beginner’s Guide to Control Charts
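For a before/after comparison, a control chart asks whether the "after" values fall outside the natural variation of the "before" period. This is a minimal sketch of an individuals (XmR) chart, assuming a hypothetical series of weekly lead times in hours; 2.66 is the standard XmR constant (3 divided by d2 = 1.128).

```python
from statistics import mean

def xmr_limits(baseline):
    """Return (lower, center, upper) control limits for an individuals chart."""
    center = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    avg_moving_range = mean(moving_ranges)
    spread = 2.66 * avg_moving_range
    return center - spread, center, center + spread

def points_outside_limits(values, baseline):
    """Values that fall outside the limits computed from the baseline period."""
    lower, _center, upper = xmr_limits(baseline)
    return [v for v in values if v < lower or v > upper]

# Hypothetical weekly lead times (hours) before and after a process change.
BEFORE = [10, 12, 11, 13, 12, 10, 11]
AFTER = [9, 6, 5, 6]

print(xmr_limits(BEFORE))
print(points_outside_limits(AFTER, BEFORE))
```

Points outside the limits suggest the change had a real effect rather than being routine variation; points inside them are not evidence of improvement, however encouraging they look on a dashboard.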

How do you communicate these metrics to leadership in a way that they will care or see the value in collecting them?

From the community:

- Compare the lack of business intelligence in software delivery with the analytics maturity that the organization already has in other areas (IT, sales, etc.)

- Give context to the metrics, whether they are going up or down

- Give insights, and make suggestions

- DORA's The ROI of DevOps Transformation report - might help leaders see the impact and possibilities that improved software delivery performance may have

- Have a hypothesis: "We believe X will result in Y, and we will know this when [metric] improves by X%"

How precise do the metrics need to be?

From the community:

- Precise enough to support the decision you are trying to make or change you are trying to drive.

- Vitrina: a portfolio development kit for DevOps

Where to start to make improvements that will reflect in the key metrics?

From the community:

- The Value vs. Effort Matrix

- Let's all work to achieve continuous delivery, and here are some measurements that may help guide you

- Locus of control might help, too. An individual contributor might not be able to change how incentives work in the org but can change their practice of writing tests

When is it too early for a company/product to start tracking DORA metrics?

From the community:

- When the team does not want to work on continuous improvement

Avoiding weaponizing metrics, i.e., pursuing a better number at any cost

From the community:

- "It's not a metrics problem, it's a culture problem"

- Weaponizing comes from the understanding that the metrics provide absolute values. In fact they provide relative ones against a reference that you make up.

- Setting a goal for all teams in the org to work for, and helping them find metrics that track progress to it, while carefully avoiding comparing each team's progress with other teams


Bottom-up or top-down? DORA Community Discussion

January 11, 2024 by Amanda

Discussion Topic: Focus for 2024

The DORA Community Discussions start with a brief introduction talk, and then a discussion with the attendees using the Lean coffee format.

Topics discussed:

Internal development platforms, bottom-up or top-down?

From the community:

- Build as a product that solves things people want solved, and not things they enjoy solving

- Recognize contributions to the platform

- Consider "team topologies" to arrange different kinds of teams

- Team Topologies DORA Community presentation

- Platform as a lab - build cool features into the platform that might be good fits for the product

- "Find something they really hate and build from there" -- I had good support for building an internal dev platform for an embedded project by fixing roadblocks our internal IT team put up. For example, setting up CI infrastructure and giving devs access to a Linux dev env.

- "...and don't take away the problems they like solving…"

Bringing cloud costs in front of developers, and gating on a budget in a pipeline

From the community:

- Cost control is a team activity. Make sure the team has a baseline level of understanding around how costs accumulate when using cloud services.

- It is all related to shifting FinOps practice left and making engineers accountable for their software development costs. It is a wide topic, since cloud costs involve various aspects (infrastructure, logs, etc.). Visibility and working against goals are a good first step. Empowering developers should start from leadership.

- Concerns not only about the cost to the company, but the cost to the planet, using resources to run the cloud.

- Implementing "cloud cost management by-design" with platform engineering

- https://www.infracost.io/
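Gating on a budget in a pipeline can be as simple as comparing an estimated cost with an agreed limit and failing the build when it is exceeded. This is a minimal sketch with hypothetical function names and figures; how the estimate is produced (for example, by a cost-estimation tool) is outside the sketch.

```python
def check_budget(estimated_monthly_cost, monthly_budget):
    """Return (ok, message) for a pipeline cost gate."""
    if estimated_monthly_cost <= monthly_budget:
        return True, (
            f"OK: estimated ${estimated_monthly_cost:.2f} "
            f"is within the ${monthly_budget:.2f} budget"
        )
    overage = estimated_monthly_cost - monthly_budget
    return False, (
        f"FAIL: estimated ${estimated_monthly_cost:.2f} "
        f"exceeds the budget by ${overage:.2f}"
    )

def gate_exit_code(estimated_monthly_cost, monthly_budget):
    """Exit code a pipeline step could return: 0 passes, 1 blocks the change."""
    ok, message = check_budget(estimated_monthly_cost, monthly_budget)
    print(message)  # surfaces the cost to the developer in the pipeline log
    return 0 if ok else 1

# Hypothetical figures: a change raises the estimate above a $500 budget.
print(gate_exit_code(estimated_monthly_cost=540.0, monthly_budget=500.0))
```

Printing the message even when the gate passes keeps costs visible to developers on every run, which supports the baseline understanding the discussion called for.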

Engineering Productivity vs/or DORA

From the community:

- How to empower software delivery teams

- Measure outcomes, the things that will happen if people are able to do their best work together.

- Using visuals like value stream maps gets people more engaged and thinking more laterally.

Where to start in a project/company with the worst DORA metrics?

From the community:

- DORA Core Model

- Start with what's most painful and expand. If it is quality and restoring service, focus on what can move the needle there. If it is developer agility, focus there based on the DORA model. Like any adoption and journey, start small and grow :)

How are you using AI particularly in development and what benefit, if any, are you seeing?

From the community:

- Learning about AI at Agile Testing Days 2023

- Key Benefits of Pairing Generative AI Tools with Developer Observability

